AVASAG (BMBF, 2020-2023) focuses on two aspects that are essential for the automatic sign animation of 3D avatars: 1. correct and comprehensive annotation of the input (i.e., text input), as required for machine learning (ML) training data; 2. the definition of an extended intermediate representation of signs, multi-modal sign streams (MMS). MMS include imprecise hand shapes, hand positions, and positioning, pauses, dynamics and facial expressions, detailed body and facial animation, their transitions, and context information that goes beyond the traditional gloss annotation. Instances of the MMS representation control a 3D avatar’s animation (posture, gesture, and facial expression) realistically and at a quality high enough for the meaning to be understood.

AVASAG has the following research and development topics:

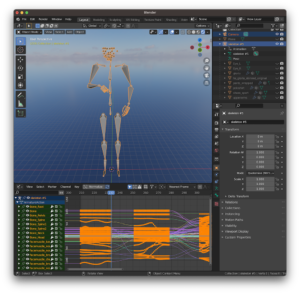

An automated recording system (hardware and software) for the acquisition of individual signs (3D signs), with which a 3D sign language avatar can be controlled. For this purpose, it is necessary to simultaneously and precisely capture the body movements, finger movements, and facial movements of a person and to transfer them to a 3D sign avatar.

(Semi-)automated annotation methods for the fast generation of data pairs (sentence and MMS), using user interfaces for cooperative ML together with interaction and visualization techniques: a) to increase the transparency of machine annotation processes and b) to correct machine-generated annotations where necessary.

The conception of a novel abstract sign representation, multi-modal sign streams (MMS), which, on the one hand, allows temporally and spatially correlated sign aspects to be modeled in more detail and, on the other hand, allows corrections to be inserted into the animation of sign aspects at runtime.

Generation of data for machine learning and their publication for use in future application-oriented research in the field of sign languages.

Machine learning (ML) from text to MMS using deep learning on neural networks, the technology that has brought about significant breakthroughs in machine translation between textual languages in recent years. In addition, an authoring tool for training sign language and translation, so that content and sign language can be easily created, edited, and automatically extended. The authoring tool is especially necessary for adaptation/fine-tuning to new application domains.

Use of realistic 3D animation of face, head, body, and extremities for communication. Especially important is a representation of time and simultaneity, touch, and other body activities (e.g., inflating the cheeks).

Technical and perceptual evaluation of the achieved sign animation and the required intermediate steps.
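To make the idea of a multi-stream sign representation more concrete, the following is a minimal Python sketch of how parallel, timed channels and a runtime correction could be modeled. It is an illustration only and does not reproduce the actual MMS format or the MMS Player API; the class names (`Segment`, `SignStream`), the channel names, and the value labels are all hypothetical assumptions.

```python
from dataclasses import dataclass, field, replace
from typing import List


@dataclass(frozen=True)
class Segment:
    """One timed event on one channel (hypothetical structure, not the real MMS schema)."""
    channel: str   # assumed channel name, e.g. "right_hand" or "face"
    start: float   # start time in seconds
    end: float     # end time in seconds (exclusive)
    value: str     # symbolic label, e.g. a hand shape or facial expression


@dataclass
class SignStream:
    """A sign described by several channels that may overlap in time."""
    gloss: str
    segments: List[Segment] = field(default_factory=list)

    def active_at(self, t: float) -> List[Segment]:
        """Return all segments running at time t, i.e. what happens simultaneously."""
        return [s for s in self.segments if s.start <= t < s.end]

    def correct(self, channel: str, t: float, new_value: str) -> None:
        """Replace the value of the segment on `channel` that is active at time t."""
        for i, s in enumerate(self.segments):
            if s.channel == channel and s.start <= t < s.end:
                self.segments[i] = replace(s, value=new_value)
                return
        raise KeyError(f"no segment on channel {channel!r} at t={t}")


# Illustrative data: two hands and the face overlap in time.
stream = SignStream(
    gloss="EXAMPLE",
    segments=[
        Segment("right_hand", 0.0, 0.8, "flat"),
        Segment("left_hand", 0.0, 0.8, "flat"),
        Segment("face", 0.2, 0.6, "neutral"),
    ],
)

# A correction applied after the stream was built, e.g. inflating the cheeks.
stream.correct("face", 0.3, "puffed_cheeks")
simultaneous = [s.channel for s in stream.active_at(0.3)]
```

Keeping each channel as its own list of timed segments is one simple way to express simultaneity (several segments active at the same time) while still allowing a single aspect of the sign to be adjusted without touching the others.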

Links:

- Official AVASAG Project Page: https://avasag.de

- MMS Player software: https://github.com/DFKI-SignLanguage/MMS-Player