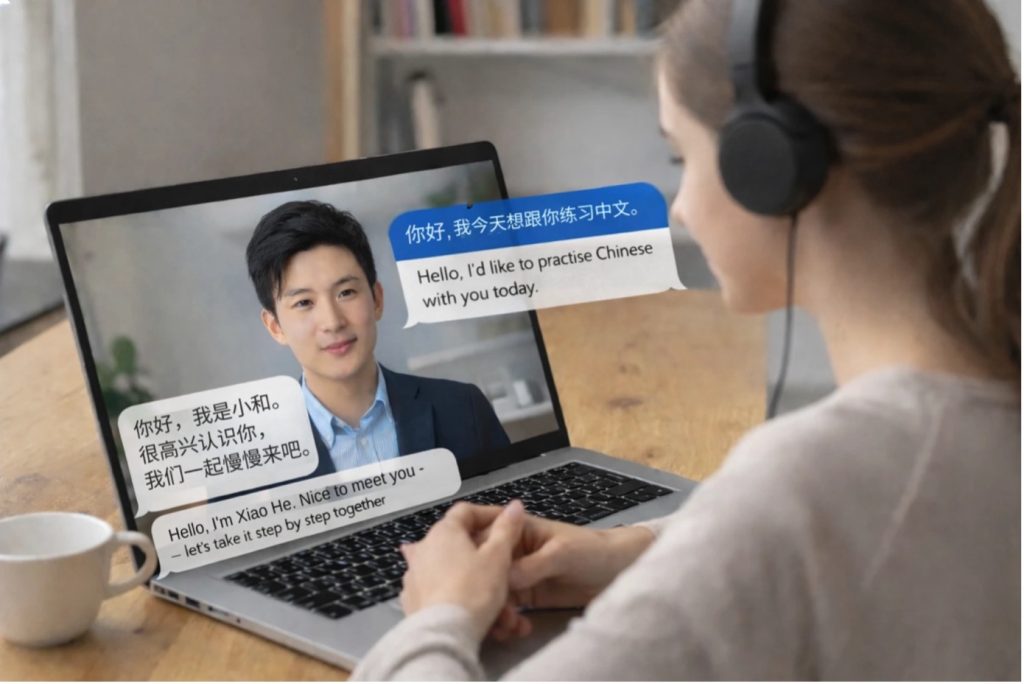

The CLIKK research project (Chinese language teaching with large language models and a focus on intercultural communication skills) started in October 2025. It investigates how AI-based virtual agents can support the acquisition of intercultural skills in Chinese language teaching.

CONFIDENCE is developing an interactive system that uses AI, sensors, and social-interactive agents to generate personalized content that helps children aged eight to twelve boost their self-esteem and helps reduce bullying.

Skills4Kids addresses obesity in children and adolescents. The project is developing a digital therapy and diagnostic app that targets emotional dysregulation and uses a social-digital AI assistance system to provide early, low-threshold access to evidence-based strategies derived from the START program. The goal is to promote emotional resilience and healthy habits in a wide range of young people and, ultimately, to obtain approval for insurance reimbursement.

The NEARBY project aims to develop variability-free brain-computer interface (BCI) systems for use outside the laboratory. To this end, it will create a comprehensive database of EEG data recorded from various test subjects over longer periods of time, under different conditions and in different environments. The aim is to better understand how the data vary across these conditions and to develop new algorithms that can reduce or even completely suppress this variability.

HAIKU – As AI systems are introduced into aviation, it is essential, both for safe operations and for society in general, that the people who currently keep aviation so safe can work with, train and supervise these AI systems, and that future autonomous AI systems make judgements and decisions that humans would find acceptable. HAIKU will pave the way for human-centric AI by developing new AI-based ‘Digital Assistants’ and associated Human-AI Teaming practices, guidance and assurance processes, through the exploration of interactive AI prototypes in a wide range of aviation contexts.

Past Projects

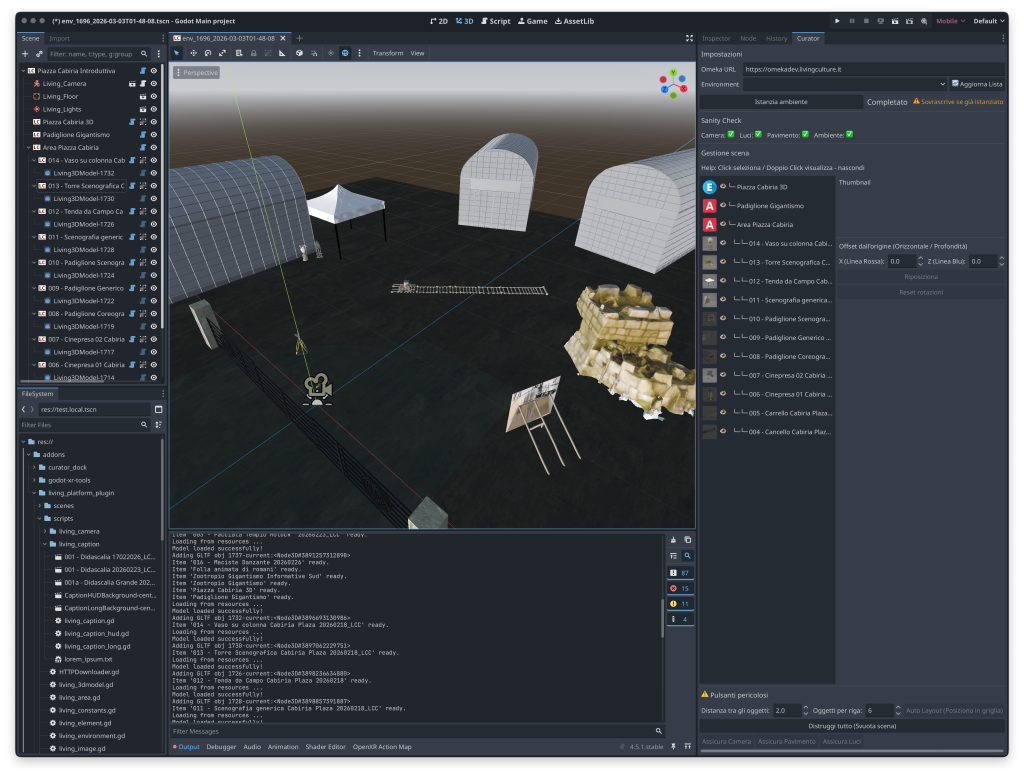

Platform Living Culture fills the gap between research, documentation and engagement in cultural heritage initiatives. It sets up a digital ecosystem for knowledge encoding, immersive and interactive display, and thematic perspectives, to support investigation and communication.

BIGEKO – A sign language recognition model for the bidirectional translation between sign language and text, including emotional information.

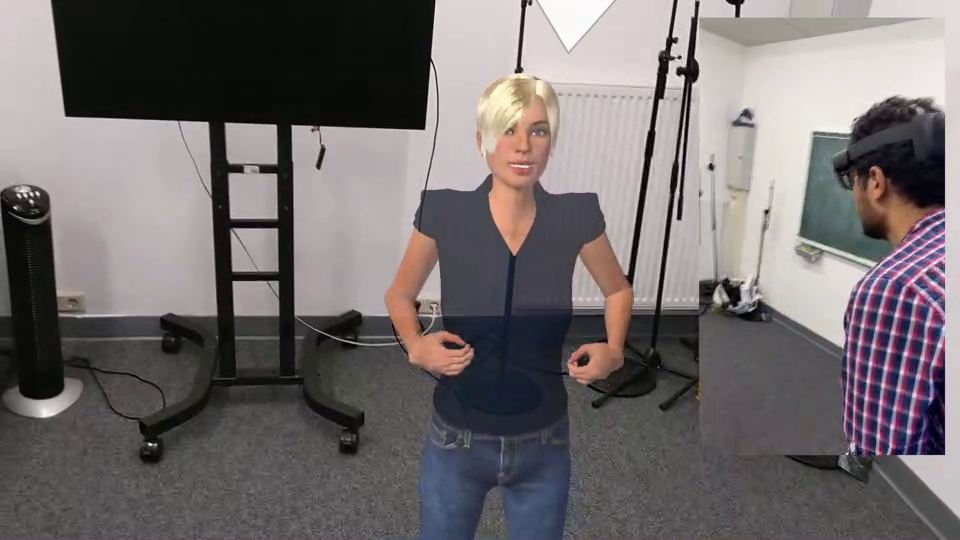

MITHOS – Interactive Training in Mixed Reality for Dealing with Heterogeneity and Conflict Situations at School. The goal of MITHOS is to support the sustainable acquisition of social skills for the classroom through mixed reality. MITHOS provides realistic socio-emotional training scenarios in which teachers and teacher trainees can better prepare for the challenges of handling conflicts in heterogeneous classes.

The main goal of the EXPECT project is the development of an adaptive, self-learning platform for human-robot collaboration that not only enables various types of active interaction, but is also capable of inferring human intention from multimodal data (gestures, speech, eye movements, and brain activity). In the project, methods for automated labeling and joint evaluation of multimodal human data will be developed and evaluated in test scenarios.

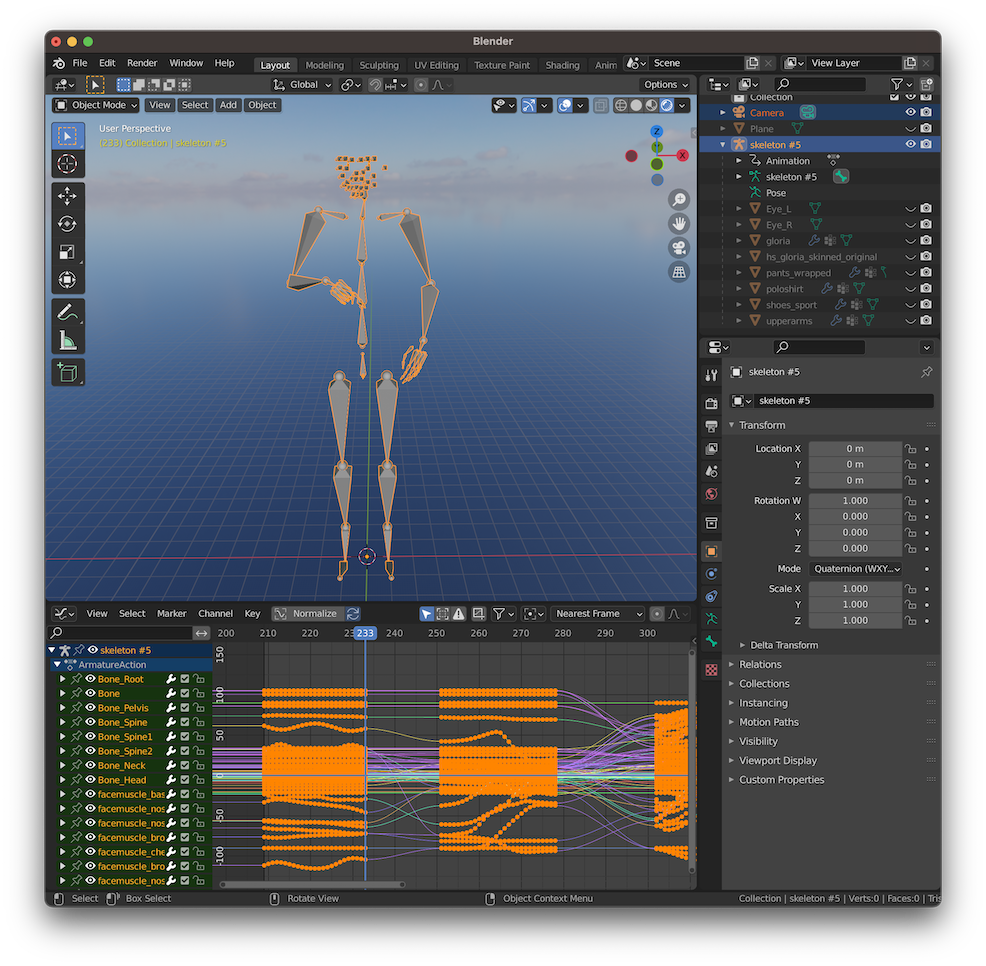

AVASAG focuses on two essential aspects of automatic sign animation for 3D avatars: 1. correct and comprehensive annotation of the input (i.e., the text input) as required for machine learning (ML) training data, and 2. the definition of an extended intermediate representation of signs, multimodal signal streams (MMS).

The research project “SignReality – Extended Reality for Sign Language Translation” aims to develop a model and an augmented reality (AR) application that visualizes an animated interpreter for German Sign Language (DGS).

The MePheSTO vision is to break new scientific ground for the next generation of precision psychiatry through the analysis of social interaction based on artificial intelligence methods.